Overview

The tool automates the generation of Smart Data Models (SDM) from visual models, bridging the gap between high-level domain design and technical implementation for Digital Twins and IoT ecosystems.

Technical Workflow

- Input: Users define domain entities and relationships using B-UML (a simplified UML dialect) within the BESSER Pearl editor.
- Transformation: The engine maps these models to the NGSI-LD standard and Schema.org vocabularies.
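
As a rough illustration of the transformation step, the sketch below turns a simplified entity description into a schema.json-style dictionary. It is not the BESSER API: `Attribute`, `Association`, `EntityClass`, `TYPE_MAP` and `to_schema` are hypothetical names. The output loosely follows conventions seen in published Smart Data Models schemas (an `x-ngsi` annotation marking each attribute as a Property or Relationship, with a Schema.org datatype as its model), but it is an assumption-laden approximation, not the exact file the tool emits.

```python
import json
from dataclasses import dataclass, field


# Hypothetical stand-ins for a B-UML class; the real BESSER metamodel
# is richer, and these names are illustrative assumptions.
@dataclass
class Attribute:
    name: str
    type: str  # primitive type name: "str", "int", "float" or "bool"


@dataclass
class Association:
    name: str
    target: str  # name of the related entity type


@dataclass
class EntityClass:
    name: str
    description: str
    attributes: list = field(default_factory=list)
    associations: list = field(default_factory=list)


# Assumed mapping: primitive type -> (JSON Schema type, Schema.org datatype).
TYPE_MAP = {
    "str": ("string", "https://schema.org/Text"),
    "int": ("integer", "https://schema.org/Number"),
    "float": ("number", "https://schema.org/Number"),
    "bool": ("boolean", "https://schema.org/Boolean"),
}


def to_schema(entity: EntityClass) -> dict:
    """Build a simplified, schema.json-style dict for one entity."""
    properties = {
        "id": {"type": "string", "format": "uri"},
        "type": {"type": "string", "enum": [entity.name]},
    }
    for attr in entity.attributes:
        json_type, model = TYPE_MAP[attr.type]
        properties[attr.name] = {
            "type": json_type,
            "description": f"Property. The {attr.name} of the {entity.name}",
            "x-ngsi": {"type": "Property", "model": model},
        }
    for assoc in entity.associations:
        # Associations become Relationships pointing at another entity's URI.
        properties[assoc.name] = {
            "type": "string",
            "format": "uri",
            "description": f"Relationship. Reference to a {assoc.target} entity",
            "x-ngsi": {"type": "Relationship"},
        }
    return {
        "$schema": "http://json-schema.org/schema#",
        "title": entity.name,
        "description": entity.description,
        "type": "object",
        "properties": properties,
        "required": ["id", "type"],
    }


sensor = EntityClass(
    name="WeatherSensor",
    description="A sensor reporting ambient weather readings",
    attributes=[Attribute("temperature", "float"), Attribute("name", "str")],
    associations=[Association("refDevice", "Device")],
)
print(json.dumps(to_schema(sensor), indent=2))
```

Associations are mapped to URI-valued Relationships because NGSI-LD links entities by identifier rather than by embedding one entity inside another.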

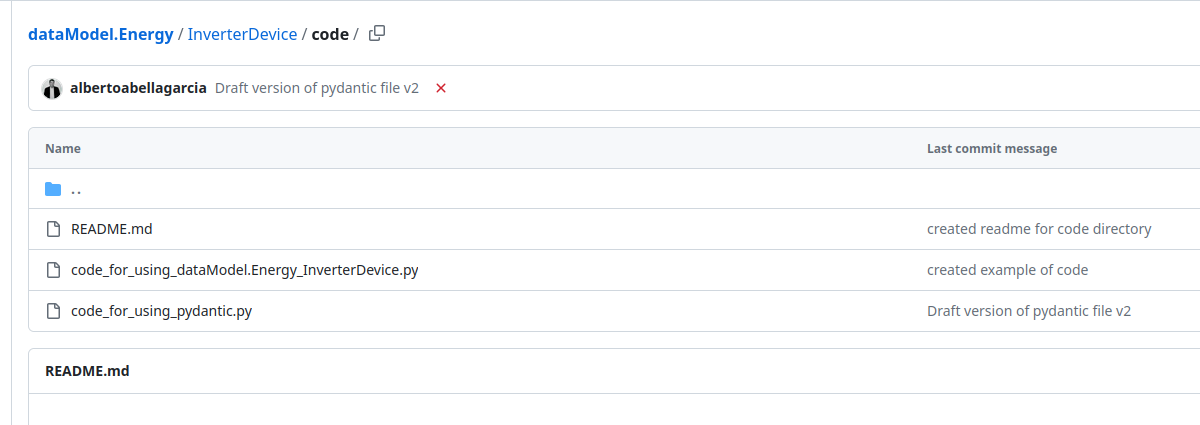

- Output: For every entity, it automatically generates a compliant folder containing:
  - schema.json: The technical JSON Schema definition.
  - Examples: Multi-format payloads including JSON-LD, NGSI-v2, and Normalized NGSI-LD.
  - Documentation: Automatically derived human-readable specifications.

Key Advantages

- Interoperability: Targets full compliance with ETSI NGSI-LD and the SDM contribution guidelines.
- Model-Driven Engineering (MDE): Moves the “source of truth” to a visual model, reducing manual coding errors in complex JSON-LD structures.
- Efficiency: Accelerates the deployment of standardized data spaces by automating the boilerplate required for context brokers (e.g., Orion).
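
To make the context-broker point concrete, here is a minimal sketch of handing a generated normalized NGSI-v2 example to an Orion broker through the NGSI-v2 `POST /v2/entities` operation. The broker URL (`localhost:1026`, Orion's default port) and the helper name `build_create_request` are assumptions; the request is only built, not sent, so the snippet runs without a broker.

```python
import json
import urllib.request

# Assumed broker location; Orion listens on port 1026 by default.
ORION_URL = "http://localhost:1026"


def build_create_request(entity: dict, base_url: str = ORION_URL) -> urllib.request.Request:
    """Prepare (but do not send) an NGSI-v2 'create entity' request."""
    body = json.dumps(entity).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/v2/entities",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


example = {
    "id": "urn:ngsi-ld:WeatherSensor:001",
    "type": "WeatherSensor",
    "temperature": {"type": "Number", "value": 21.5},
}
req = build_create_request(example)
print(req.get_method(), req.full_url)  # POST http://localhost:1026/v2/entities

# To actually send it against a running broker (expects 201 Created on success):
# with urllib.request.urlopen(req) as resp:
#     print(resp.status)
```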